Managing AI Risks - How to Protect Sensitive Information with Microsoft Purview

Your users are using AI whether you know about it or not. Data security and permissions in the environment are essential to protect your compliance status, customer trust, and sensitive information.

In a recent webinar, David Trum, Senior Solutions Strategist, and Todd Brink, Information Security Senior Consultant at ProArch, discussed the challenges of managing AI risks and protecting sensitive information using Microsoft Purview.

Keep reading for a summary of the discussion and click here to watch the full webinar.

New Data Challenges with AI Advancements

GenAI and Copilot are powerful tools for enhancing productivity, but they pose new risks due to the ways people might input data.

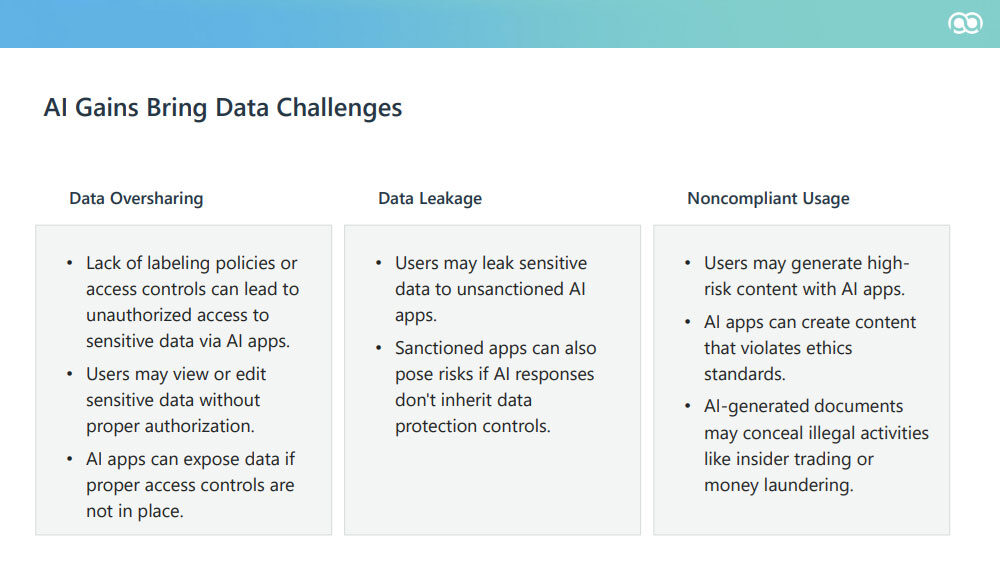

As David Trum pointed out: "Generative AI is really a new way of searching for data. All you have to do is ask the right question. Now, there are new risks of data oversharing, data leakage and noncompliant usage."

To address these risks, we first need to recognize them. Oversharing happens when policies and access controls are missing, so people can see content they are not authorized to view. Unsanctioned AI apps can mishandle data, and even sanctioned apps are risky without proper data protection. Additionally, AI can be misused for harmful purposes if its use is not monitored.

Microsoft Purview: Comprehensive Data Security

David stated that “Microsoft Purview is a large product, part of Microsoft E5, and there's lots of different areas that it covers from security to helping you apply access controls and governance all the way down to measuring risk and compliance against specific controls.” Microsoft Purview offers a robust suite of tools for managing data security across an organization.

Key features of Purview include:

- Access auditing and control

- Sensitivity labeling

- Data Loss Prevention (DLP) policies

- Cloud application security

- AI-powered risk assessment

On managing Microsoft Purview policies, Todd Brink emphasized the importance of a collaborative approach: "We'll often see with clients that we work with, a designated IT guy, but we'll also work with legal or privacy in conjunction because everybody kind of has a stake on how these policies are created. It's not just an IT thing anymore."

Strategies for Protecting Sensitive Information

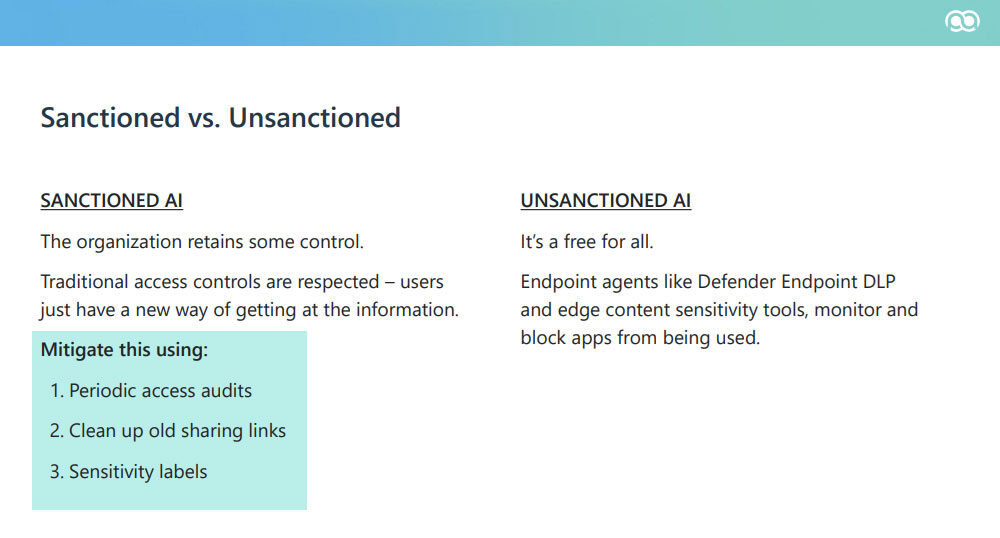

Trum emphasized the importance of sanctioned AI: “The sanctioned AI is good, and the organization retains some control, and all the traditional access controls are respected. But what you need to do is do periodic access audits to ensure the permissions are set appropriately. And it's really obvious that we need to clean up old sharing links.”

Trum outlined several strategies for protecting sensitive information using Microsoft Purview:

- Conduct periodic access audits to ensure appropriate permissions.

- Implement sensitivity labels to add an extra layer of security.

- Use Microsoft Defender to monitor and block unsanctioned cloud applications.

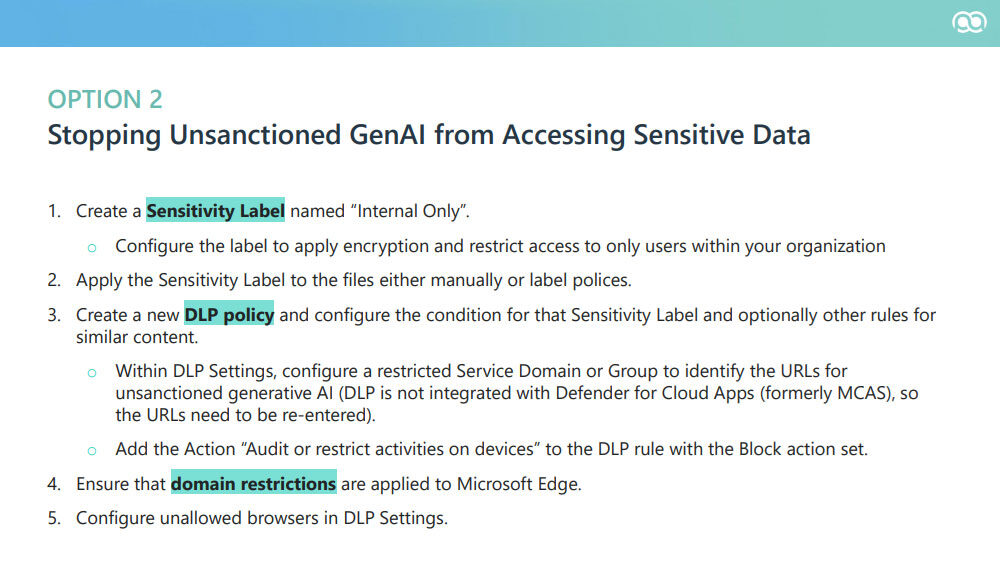

- Create DLP policies to restrict access to sensitive content.

- Leverage the AI hub (in preview) for visibility into AI usage and risk assessment.
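To make the idea of a periodic access audit concrete, here is a minimal Python sketch that flags old sharing links for review. It is not a Purview or SharePoint API: the `SharingLink` record, its fields, and the 180-day threshold are all hypothetical, and the sketch assumes you have already exported sharing-link data from your environment into some list of records.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SharingLink:
    """Hypothetical record for one exported sharing link (not a real API type)."""
    url: str
    scope: str      # assumed values: "anonymous", "organization", "specific_people"
    created: date


def find_stale_links(links, today, max_age_days=180):
    """Return links created more than max_age_days before today, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [link for link in links if link.created < cutoff]
    return sorted(stale, key=lambda link: link.created)


if __name__ == "__main__":
    exported = [
        SharingLink("https://example.invalid/a", "anonymous", date(2023, 1, 15)),
        SharingLink("https://example.invalid/b", "organization", date(2024, 6, 1)),
    ]
    for link in find_stale_links(exported, today=date(2024, 7, 1)):
        print(f"Review: {link.url} ({link.scope}, created {link.created})")
```

In practice the threshold and the decision about what to do with a flagged link (remove, renew, or restrict) would come from the policy that IT, legal, and privacy stakeholders agree on.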

For example, a DLP policy can be created to look for a specific sensitivity label. In the case of source code, it is fairly straightforward to create a sensitive information type that looks for identifying markers such as company-specific libraries.
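The idea of detecting source code by its references to company-specific libraries can be illustrated outside of Purview with a small pattern-matching sketch. The Python below is only an illustration of the matching logic, not Purview configuration or a Purview API. The package names `acme_core` and `acme_billing` are hypothetical placeholders, and the pattern assumes Python-style import lines.

```python
import re

# Hypothetical internal package names; replace with your organization's own.
COMPANY_LIBRARIES = ("acme_core", "acme_billing")

# Matches Python-style import lines that reference one of the internal packages.
_IMPORT_PATTERN = re.compile(
    r"^\s*(?:from|import)\s+("
    + "|".join(re.escape(name) for name in COMPANY_LIBRARIES)
    + r")\b",
    re.MULTILINE,
)


def find_company_library_references(text: str) -> list[str]:
    """Return the sorted, de-duplicated internal packages imported in text."""
    return sorted(set(_IMPORT_PATTERN.findall(text)))


def looks_like_company_source(text: str, min_matches: int = 1) -> bool:
    """Flag text that imports at least min_matches distinct internal packages."""
    return len(find_company_library_references(text)) >= min_matches


if __name__ == "__main__":
    snippet = "import os\nfrom acme_core.auth import login\nimport acme_billing\n"
    print(find_company_library_references(snippet))
    print(looks_like_company_source(snippet))
```

A similar regular expression or keyword list is the kind of pattern a custom sensitive information type could be built around, though the exact setup in Purview should be taken from Microsoft's documentation rather than from this sketch.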

What’s Next?

Managing AI risks and protecting sensitive information requires a comprehensive strategy that leverages the full capabilities of Microsoft Purview. If this is a concern for your organization, our experts can guide you in setting up effective policies and controls tailored to your specific needs.

Our data protection services can help you navigate the complexities of AI and data security, ensuring your organization's sensitive information is well-protected. For more insights, download the full recording and slide deck of the webinar and learn more about our services at ProArch.

Parijat, Assistant Manager Content, helps shape ProArch’s brand voice, turning complex tech concepts into clear, engaging content. Whether it’s blogs, email campaigns, or social media, she focuses on making ProArch’s messaging accessible and impactful. With experience in Oracle, Cloud, and Salesforce, she blends creativity with technical know-how to connect with the right audience. Beyond writing, she ensures consistency in how ProArch tells its story, helping the brand stay strong, authentic, and aligned with its vision.