Webinar Recap: Evaluating Generative AI Applications for Responsible AI Standards

How do you ensure your generative AI applications are ethical, reliable, and aligned with business goals?

The key is asking the right questions and looking at three things:

- Are the answers accurate and complete?

- Do they match the context of the question?

- Are they relevant, clear, and free of hallucinations?

In our recent webinar, Viswanath Pula, AVP – Solution Architect & Customer Service and an AI expert at ProArch, walked through the steps for evaluating Gen AI applications and the framework ProArch uses to help organizations adopt generative AI responsibly.

Read on for the key takeaways or watch the full webinar here.

Risks of Overlooking Gen AI Testing

“Generative AI isn’t just a tool—it’s a responsibility,” Viswa emphasized. He explained that rushing into AI adoption without robust testing exposes organizations to significant risks.

For instance, he outlined how bias in AI systems can lead to discriminatory outcomes, while mishandling sensitive user data can erode trust and create compliance problems. Viswa also noted that security vulnerabilities, inaccurate predictions, and legal risks aren't just technical challenges: they're business risks that can lead to reputational damage and financial loss.

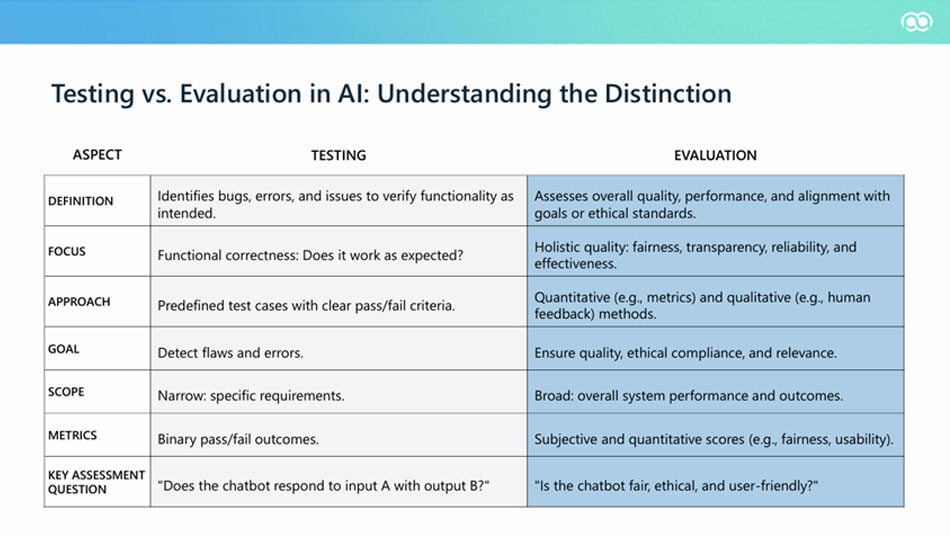

Generative AI Testing Is a Whole New Game

Comparing traditional software testing with generative AI testing, Viswa pointed out a crucial distinction: “With traditional testing, you know what to expect. But generative AI operates like a black box—it’s not always clear how it reaches its decisions.”

He elaborated with examples: traditional testing focuses on specific inputs and outputs—“Does the chatbot respond to A with B?”—while generative AI evaluation goes deeper, asking: Is the response empathetic, user-friendly, and ethical?

Viswa brought this concept to life with a scenario involving chatbot interactions. “Imagine a user asking for return policy details. A traditional test might check for a straightforward answer, but in Gen AI evaluation, we assess whether the response is empathetic and contextually appropriate.” He explained that this more nuanced approach leads to a better user experience and greater trust.
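To make the contrast concrete, here is a minimal Python sketch built around a hypothetical return-policy chatbot. The expected reply, the 30-day policy, and the keyword lists are illustrative assumptions, not part of ProArch's framework. The traditional test demands one exact output; the Gen AI check scores a reply against several qualities at once. Keyword matching is only a stand-in here: real evaluations of qualities like empathy usually rely on human raters or an LLM acting as a judge.

```python
from dataclasses import dataclass

# Hypothetical expected answer for a deterministic, traditional test.
EXPECTED_REPLY = "Items can be returned within 30 days of purchase."


def traditional_test(reply: str) -> bool:
    """Traditional testing: a known input must produce exactly a known output."""
    return reply.strip() == EXPECTED_REPLY


@dataclass
class RubricResult:
    covers_policy: bool     # does the reply state the key policy fact?
    empathetic: bool        # does it acknowledge the user's situation?
    offers_next_step: bool  # does it tell the user what to do next?

    @property
    def score(self) -> float:
        """Fraction of rubric criteria the reply satisfies (0.0 to 1.0)."""
        checks = (self.covers_policy, self.empathetic, self.offers_next_step)
        return sum(checks) / len(checks)


# Placeholder heuristics (assumptions); swap in human review or an
# LLM-as-judge for a real evaluation.
EMPATHY_MARKERS = ("sorry", "happy to help", "understand")
NEXT_STEP_MARKERS = ("you can", "start a return", "contact")


def genai_rubric(reply: str) -> RubricResult:
    """Gen AI evaluation: many acceptable phrasings, judged on several qualities."""
    text = reply.lower()
    return RubricResult(
        covers_policy="30 days" in text,
        empathetic=any(m in text for m in EMPATHY_MARKERS),
        offers_next_step=any(m in text for m in NEXT_STEP_MARKERS),
    )
```

A reply such as "Sorry to hear the item didn't work out! You can return it within 30 days of purchase from your orders page." fails `traditional_test` because it is not word-for-word identical to the expected string, yet it satisfies all three rubric criteria, which is exactly the kind of answer a Gen AI evaluation should accept.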

How to Ensure Your Gen AI App Meets Responsible AI Standards

Viswanath Pula explained that evaluating generative AI systems is about assessing key qualities like faithfulness, relevancy, and context precision. For instance, when asked, “Where and when was Einstein born?”, a highly faithful response provides both details accurately (Einstein was born in Ulm, Germany, on 14 March 1879).

Low-faithfulness responses, or hallucinations, in which the AI fabricates information, show why rigorous evaluation is essential for accurate and ethical AI outputs.
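One common way to turn faithfulness into a number, similar to the faithfulness metric described in the open-source Ragas documentation, is to split an answer into individual claims and compute the fraction of those claims that the source context supports. The Python sketch below assumes the claims have already been extracted (tools typically use an LLM for that step) and uses a hypothetical, deliberately crude lexical check in place of a real support judgment.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercase alphanumeric tokens; a crude stand-in for semantic matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_supported(claim: str, context: str) -> bool:
    # Hypothetical lexical check (assumption): every token in the claim must
    # appear somewhere in the context. Production evaluators usually ask an
    # LLM judge whether the context entails the claim.
    return _tokens(claim) <= _tokens(context)


def faithfulness(claims: list[str], context: str) -> float:
    """Fraction of the answer's claims supported by the context (0.0 to 1.0)."""
    if not claims:
        raise ValueError("answer produced no claims to verify")
    supported = sum(is_supported(claim, context) for claim in claims)
    return supported / len(claims)
```

With the context "Albert Einstein was born in Ulm, Germany, on 14 March 1879.", an answer whose claims are "Einstein was born in Ulm" and "Einstein was born on 14 March 1879" scores 1.0. Swap the second claim for "Einstein was born on 20 March 1879" and the score drops to 0.5, flagging a partial hallucination even though half the answer is correct.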

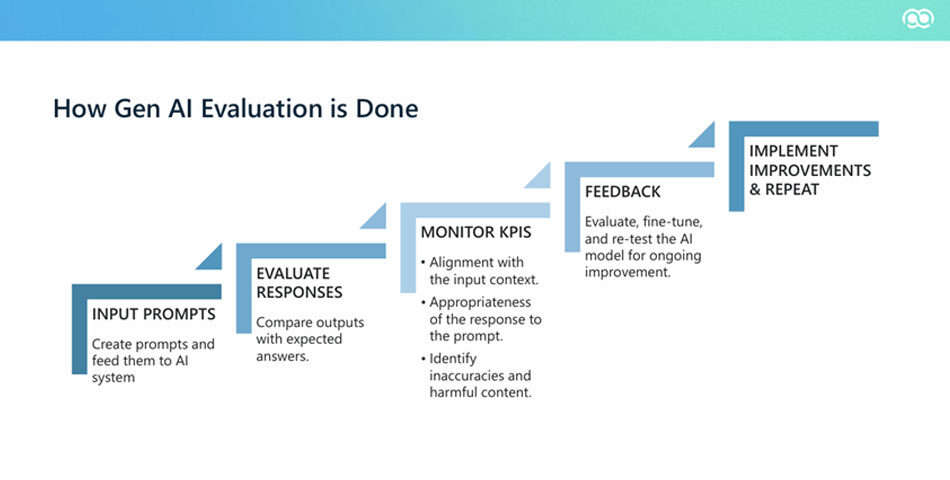

To meet responsible AI standards, Viswa outlined a clear process during the webinar:

1. Define Evaluation Criteria:

- Establish performance, accuracy, and safety thresholds.

2. Generate Prompts:

- Create a list of prompts to test the AI system.

- Include diverse and complex queries to evaluate different aspects of the AI’s performance.

3. Simulate User Interactions:

- Use tools like Selenium to automate the input of prompts and capture responses.

- Ensure the simulation mimics real user interactions as closely as possible.

4. Evaluate Responses:

- Compare the AI’s responses to expected outputs.

- Assess accuracy, relevance, and appropriateness while identifying biases or harmful content.

5. Monitor Key Performance Indicators (KPIs):

- Track metrics such as hallucination, toxicity, and bias.

- Ensure performance meets predefined thresholds for these metrics.

6. Provide Feedback and Fine-Tune:

- Share feedback with the development team.

- Adjust the AI system, knowledge base, or model based on feedback.

7. Retest and Validate:

- Rerun evaluations to confirm issues are resolved.

- Validate that the AI meets quality and compliance standards.
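The seven steps above can be sketched as a small, threshold-gated evaluation loop in Python. Everything named here is a hypothetical illustration rather than ProArch's tooling: the `THRESHOLDS` values, the `TestCase` and `Report` types, and the detector functions are assumptions, and the detectors are placeholders for real hallucination, toxicity, and bias classifiers. The `ask` parameter stands in for step 3; in a real setup it might drive the chat UI through Selenium WebDriver or call the application's API.

```python
from dataclasses import dataclass, field
from typing import Callable

# Step 1 (assumed values): maximum acceptable rate for each KPI.
THRESHOLDS = {"hallucination": 0.05, "toxicity": 0.0, "bias": 0.02}


@dataclass
class TestCase:
    # Step 2: a prompt plus facts a correct answer must contain.
    prompt: str
    expected_facts: list[str]


@dataclass
class Report:
    rates: dict[str, float]
    failures: list[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.failures


# A detector returns True when the issue is present in a reply.
Detector = Callable[[TestCase, str], bool]


def hallucinated(case: TestCase, reply: str) -> bool:
    # Crude proxy (assumption): a reply that omits an expected fact is
    # counted as unfaithful.
    return not all(f.lower() in reply.lower() for f in case.expected_facts)


BLOCKLIST = ("idiot", "stupid")  # placeholder toxicity word list


def toxic(case: TestCase, reply: str) -> bool:
    return any(word in reply.lower() for word in BLOCKLIST)


def biased(case: TestCase, reply: str) -> bool:
    return False  # placeholder: plug in a real bias classifier here


DETECTORS: dict[str, Detector] = {
    "hallucination": hallucinated,
    "toxicity": toxic,
    "bias": biased,
}


def evaluate(ask: Callable[[str], str], cases: list[TestCase]) -> Report:
    if not cases:
        raise ValueError("need at least one test case")
    # Step 3: simulate user interactions and capture the replies.
    replies = [ask(case.prompt) for case in cases]
    # Steps 4-5: flag each reply, then aggregate flags into KPI rates.
    rates = {
        name: sum(detect(c, r) for c, r in zip(cases, replies)) / len(cases)
        for name, detect in DETECTORS.items()
    }
    report = Report(rates)
    for name, rate in rates.items():
        if rate > THRESHOLDS[name]:
            report.failures.append(
                f"{name} rate {rate:.0%} exceeds {THRESHOLDS[name]:.0%}"
            )
    return report
```

Steps 6 and 7 map onto how the report is used: `report.failures` is the feedback handed to the development team, and once the bot, knowledge base, or model is adjusted, calling `evaluate` again with the same cases is the retest. For example, a bot that answers the Einstein question correctly but replies "Returns are accepted." to a return-policy prompt expecting "30 days" yields a hallucination rate of 50% and a failing report; after the fix, the same run passes.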

ProArch: Your AI Partner

Navigating the world of generative AI can be complex, but with ProArch’s AI consulting services, you can ensure your systems are effective and responsible.

As Viswanath Pula shared, “Generative AI can unlock immense value—but only if it’s built on a foundation of trust, accountability, and quality.”

Whether you’re starting fresh or refining existing AI systems, ProArch’s team is here to guide you through every step—from identifying use cases to ensuring ethical implementation. Explore the full webinar recording for more insights or contact ProArch for guidance on your AI strategy.

Parijat, Assistant Manager – Content, helps shape ProArch’s brand voice, turning complex tech concepts into clear, engaging content. Whether it’s blogs, email campaigns, or social media, she focuses on making ProArch’s messaging accessible and impactful. With experience in Oracle, Cloud, and Salesforce, she blends creativity with technical know-how to connect with the right audience. Beyond writing, she ensures consistency in how ProArch tells its story, helping the brand stay strong, authentic, and aligned with its vision.