Hallucination in LLMs & How ProArch’s Responsible AI Framework (AIxamine) Resolves It

Large Language Models (LLMs) are at the forefront of technological discussions, but they have flaws too. One of the best known is “hallucination”: generating incorrect or irrelevant content. Understanding hallucinations is crucial for using LLMs effectively, because it gives insight into both the potential and the limitations of AI. Evaluating LLMs and their outputs is equally essential for developing robust applications.

Keep reading to learn about the possible causes of LLM hallucinations, how they are evaluated, and how ProArch’s Responsible AI framework (AIxamine) helps resolve hallucination challenges.

What Are Hallucinations?

The dictionary meaning of the word hallucination is experiencing something that does not exist. A real-life analogy would be when someone remembers a conversation or episode incorrectly and ends up providing distorted information.

And how does this translate to hallucinations in LLMs?

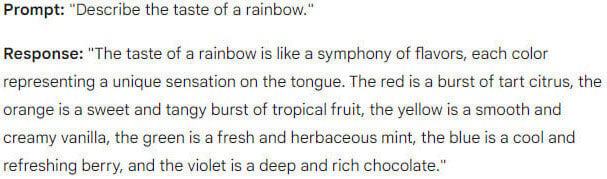

Large language models are notorious for generating details that are factually incorrect or irrelevant to the prompt. Since LLMs are designed to process and generate human-like text based on the data they were trained on, how often and how badly they hallucinate depends on how the model itself was trained.

Possible Causes of Hallucinations

- Data Biases: LLMs may hallucinate due to biases present in the training data. For instance, if the training data was gathered by scraping Wikipedia or Reddit, we cannot be sure of its accuracy. LLMs also tend to summarize or generalize from vast amounts of data, and that reasoning can go wrong.

- Lack of Context: Inadequate contextual understanding can lead to irrelevant or inaccurate output. The more relevant context you include in your prompt, the easier it is for the LLM to understand the request and provide a refined answer.

- Noise in Input: Noisy or incomplete input data can cause LLMs to hallucinate.

An example of noisy input in the context of using a language model like ChatGPT could be: “Can, like, uh, you provide, like, a summary or something of the key points, like, in the latest, uh, research paper on climate change, you know?”

In this example, the noisy input contains filler words, repetitions, and hesitations that do not add any meaningful information to the query. This kind of input can potentially confuse or distract the language model, leading to a less accurate or relevant response.

Cleaning up the input by removing unnecessary elements can help improve the model’s performance and output quality. A better input would be: “Can you provide a summary of the key points in the latest research paper on climate change?”
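The cleanup described above can be sketched in a few lines of Python. This is a minimal illustration, not a production-grade preprocessor. The filler list and the `clean_prompt` name are assumptions made for this example, and the word-level matching is deliberately naive (it would also strip a legitimate "like", as in "I like this paper").

```python
import re

# Hypothetical filler list, chosen for this example only.
# Caveat: naive matching also removes legitimate uses of "like".
FILLERS = ("uh", "um", "like", "you know", "or something")

# Match a filler together with the commas and spaces that surround it.
_FILLER_RE = re.compile(
    r",?\s*\b(?:" + "|".join(re.escape(f) for f in FILLERS) + r")\b\s*,?",
    flags=re.IGNORECASE,
)

def clean_prompt(text: str) -> str:
    """Remove filler phrases, then tidy leftover whitespace and punctuation."""
    text = _FILLER_RE.sub(" ", text)              # drop fillers and their commas
    text = re.sub(r"\s+", " ", text).strip()      # collapse repeated whitespace
    text = re.sub(r"\s+([?.!,;:])", r"\1", text)  # no space before punctuation
    return text

noisy = ("Can, like, uh, you provide, like, a summary or something of the "
         "key points, like, in the latest, uh, research paper on climate "
         "change, you know?")
print(clean_prompt(noisy))
```

Run on the noisy prompt from the example, this prints the cleaned-up question shown above. In practice a fixed word list is brittle, so treat it as a starting point rather than a complete solution.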

- Generation Method: Whether it’s the model architecture or the fine-tuning parameters, the generation method plays a large role in hallucinations.

Here is an interesting example from the early days of ChatGPT

Evaluating Hallucination in LLMs

Understanding the causes of hallucinations helps in exploring how they can be measured and mitigated. LLMs make assessments based on training data in a way that mimics human reasoning. While this approach offers efficiency and scalability, it also raises important considerations around bias, accuracy, and the need for human oversight to ensure ethical and fair outcomes.

Lower hallucination scores reflect higher accuracy and greater trustworthiness in model outputs. These scores are evaluated by examining the frequency and severity of inaccuracies or fabricated details in the generated responses. While metrics like BLEU, ROUGE, and METEOR help assess textual similarity to reference texts, they do not directly measure factual correctness.
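To see why overlap-based metrics miss factual errors, consider a simplified unigram-overlap F1 score in the spirit of ROUGE-1. This is a sketch written for this article, not the official ROUGE implementation, and the example sentences and the `unigram_f1` name are made up for illustration.

```python
from collections import Counter

def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1-style F1: harmonic mean of unigram precision and recall."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # words shared by both texts
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

reference = "the eiffel tower is located in paris"
wrong = "the eiffel tower is located in rome"             # fluent but false
paraphrase = "eiffel tower stands in the french capital"  # true, worded differently

print(f"wrong answer:       {unigram_f1(wrong, reference):.3f}")
print(f"correct paraphrase: {unigram_f1(paraphrase, reference):.3f}")
```

The factually wrong sentence shares six of seven words with the reference and scores higher than the correct paraphrase, which shares only four. That is why similarity metrics need to be paired with checks aimed at factual grounding.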

Once hallucination levels are assessed, the next critical step is to work on minimizing them—ensuring that LLM-generated content is not just fluent, but also factually grounded and contextually relevant.

Tackling hallucinations in Gen AI requires early and ongoing intervention throughout the development lifecycle. Challenges like lack of grounding in truth, black-box decision-making, and the time and resources required for evaluation make it clear that hallucination control isn’t a one-time fix—it needs to be a continuous, embedded effort.

Manual evaluation, while helpful, is time-consuming, inconsistent, and doesn’t scale. Reviewing just 200 prompts can take an entire week—an unmanageable task when teams are overseeing multiple Gen AI applications across departments.

That’s where ProArch’s Responsible AI Framework, AIxamine, comes in, embedding trust, accuracy, and accountability across every phase of your Gen AI journey.

How ProArch’s Responsible AI Framework (AIxamine) Solves Hallucination Challenges

Building Gen AI applications is exciting – but making sure that they are reliable, fair and safe is non-negotiable.

AIxamine helps your AI/ML development teams evaluate Gen AI applications with ease. With its dashboards and built-in customizable quality gates, you can quickly assess model performance on parameters like bias, toxicity, hallucination, and context precision.

Whether you’re evaluating an existing Gen AI application or looking to catch issues before launch, AIxamine integrates seamlessly with your CI/CD pipelines—enabling faster feedback loops, early risk detection, and complete control over your Gen AI release process.
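As a rough illustration of what a quality gate in a CI/CD pipeline might look like, here is a generic Python sketch. It does not show AIxamine’s actual API or configuration, which this article does not describe. The metric names, thresholds, and the `check_gates` function are all hypothetical.

```python
# Hypothetical gates: metric name -> (direction, threshold).
# "max" means the score must not exceed the threshold; "min" means it must reach it.
GATES = {
    "hallucination": ("max", 0.10),
    "toxicity": ("max", 0.05),
    "bias": ("max", 0.10),
    "context_precision": ("min", 0.80),
}

def check_gates(scores: dict, gates: dict = GATES) -> list:
    """Return a list of failure messages; an empty list means every gate passed."""
    failures = []
    for metric, (direction, limit) in gates.items():
        value = scores.get(metric)
        if value is None:
            failures.append(f"{metric}: no score reported")
        elif direction == "max" and value > limit:
            failures.append(f"{metric}: {value:.2f} exceeds maximum {limit:.2f}")
        elif direction == "min" and value < limit:
            failures.append(f"{metric}: {value:.2f} is below minimum {limit:.2f}")
    return failures

# Made-up scores standing in for the output of an evaluation run.
scores = {"hallucination": 0.18, "toxicity": 0.01,
          "bias": 0.03, "context_precision": 0.91}

failures = check_gates(scores)
for message in failures:
    print("GATE FAILED:", message)
exit_code = 1 if failures else 0  # a CI step would exit with this code
```

A pipeline step that exits with a non-zero code when any gate fails would block the release until the issue is resolved, which is the kind of early feedback loop described above.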

It provides verbose explanations of model hallucinations, enabling teams to understand the root causes of inaccuracies and refine prompts, responses, and inputs for more reliable outputs. Customizable dashboards also show how your models align with Responsible AI principles like Fairness, Safety, and Transparency—giving stakeholders the confidence to move forward.

Responsible AI starts with AIxamine

With AIxamine, you can ensure that your Gen AI applications don’t just work – they work responsibly. From evaluation to deployment, you stay in control of accuracy, safety and trust. It makes it easier for your teams to deliver business value while moving fast.

Let’s work together to deploy Gen AI apps responsibly.

Product Owner Digital Engineering

Kanika is a developer turned product owner and agile consultant with over 13 years of experience shaping software solutions from concept to launch. As a Product Owner at ProArch, she bridges the gap between technology and business, ensuring agile teams deliver solutions that are efficient, scalable, and user-centric. With certifications from Scrum Alliance (ACSPO℠, CSM℠), ICAgile, and Kellogg Executive Management, she brings expertise in design thinking, technical strategy, and process optimization. Her ability to streamline work by collaborating with various teams helps ProArch accelerate digital transformation for clients across diverse industries.